MATERIAL WITNESS

The evidential role of matter—when media records trace evidence of violence—explored through a series of cases drawn from Kosovo, Japan, Vietnam, and elsewhere.

In this book, Susan Schuppli introduces a new operative concept: material witness, an exploration of the evidential role of matter as both registering external events and exposing the practices and procedures that enable matter to bear witness. Organized in the format of a trial, Material Witness moves through a series of cases that provide insight into the ways in which materials become contested agents of dispute around which stakeholders gather.

These cases include an extraordinary videotape documenting the massacre at Izbica, Kosovo, used as war crimes evidence against Slobodan Milošević; the telephonic transmission of an iconic photograph of a South Vietnamese girl fleeing an accidental napalm attack; radioactive contamination discovered in Canada's coastal waters five years after the accident at Fukushima Daiichi; and the ecological media or “disaster film” produced by the Deepwater Horizon oil spill in the Gulf of Mexico. Each highlights the degree to which a rearrangement of matter exposes the contingency of witnessing, raising questions about what can be known in relationship to that which is seen or sensed, about who or what is able to bestow meaning onto things, and about whose stories will be heeded or dismissed.

An artist-researcher, Schuppli offers an analysis that merges her creative sensibility with a forensic imagination rich in technical detail. Her goal is to relink the material world and its affordances with the aesthetic, the juridical, and the political.

Listen: MIT Podcast

MIT Press

Andrew Barry, "Collective Empiricism and the Material Witness," Journal of Visual Culture 20, no. 3 (2022).

Reviewed, Mitchell Anderson, Art Monthly, Issue 459, 09.2022

Reviewed, Camille Roquet, Interfaces, 47, 2022

Reviewed, Lisa Deml, Third Text, 10.09.2021

PUBLICATIONS BY SCHUPPLI

JUST ICE: Cold Rights in a Warming World (monograph in progress)

Arctic Practices: Design for a Changing World. eds. Bert De Jonghe and Elise Misao Hunchuck, Actar Publishers, 2025

Susan Schuppli & Liz Thomas, "Ice Core Temporalities," in Evidence Ensembles, DNA #24, eds. Christoph Rosol, Giulia Rispoli, Katrin Klingan, Niklas Hoffmann-Walbeck, Haus der Kulturen der Welt (Leipzig: Spector Books, January 2024).

“Cryoception” in Sensing Elementality, Journal of Environmental Media Special issue, Intellect Books, Vol 5.1, 2024

“Sensing Ice: From Cryoception to the CryoSat” in Imagine Earth, Aarhus University & Louisiana Museum of Modern Art, 2023

Singing Ice: Ladakhi folk songs about mountains, glaciers, rivers, and streams, a book project with Morup Namgyal, Faiza Ahmad Khan, Radha Pandey, Jigmet Anjmo, British Council / Delhi India, 2022

“Learning from Ice: Notes from the Field by Susan Schuppli.” In Fieldwork for Future Ecologies / Radical Practice for Art and Art-based Research. Eds. Bridget Crone, Sam Nightingale, Polly Stanton, Onomatopee 225, Eindhoven, 2022.

Chernobyl: The Image and Sound of Radioactivity Itself, The Wire (re-printed from MIT Press Reader), 01.03.2022.

Postcards from the Anthropocene: Unsettling the Geopolitics of Representation. Eds. Benek Cincik & Tiago Torres-Campos. DPR Barcelona, 2022.

“Reflections on Filming in Svalbard.” Creative Ecologies, PUBLIC 63, Toronto, 2022 (artist project).

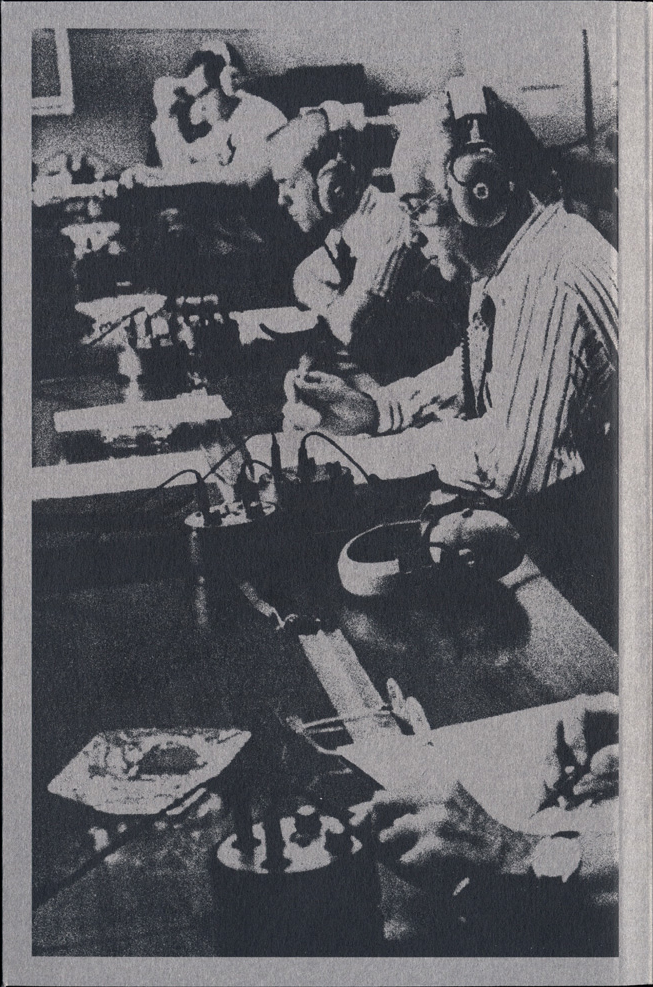

“Listening to Answering Machines.” Eavesdropping: A Reader. Eds. Joel Stern & James Parker. Melbourne: Liquid Architecture & Perimeter Books, 2019.

“Trace Evidence Trilogy”, Through Post-Atomic Eyes, Eds. Claudette Lauzon & John O’Brian, Montreal: McGill-Queen's University Press, 2019 (artist project).

“Should Videos of Trees have Standing?” A Cultural History of Law in the Modern Age. Eds. Celermajer, Danielle and Richard Sherwin. London: Bloomsbury, 2018.

“Public Proxies” Public Studio / The Long Now. Art Gallery of York University, 2018.

Executing Practices. Eds. Helen Pritchard, Eric Snodgrass and Magda Tyżlik-Carver. Open Humanities Press, 2018. ISBN: 978-1-78542-056-6

“The Subterfuge of Screens.” Montreal: Musée d’art contemporain de Montréal, 2017.

“Computing the Law // Searching for Justice.” FORMER WEST: Art and the Contemporary after 1989. Eds. Buden, Hlavajova and Sheikh. Utrecht: BAK & London: MIT Press, 2017.

“Trace Evidence: A Nuclear Trilogy.” The Nuclear Culture Source Book. Ed. Carpenter, Ele. London: Black Dog Publishing in partnership with Bildmuseet, Sweden and Arts Catalyst, 2016.

“Dirty Pictures.” Living Earth Field Notes from the Dark Ecology Project 2014-2016. Eds. Belina, Mirna and Arie Altena. Amsterdam: Sonic Acts, 2016. 189-210.

“Infrastructural Violence: The Smooth Spaces of Terror.” Photographers Gallery London (2015).

“Slick Images: The Photogenic Surface of Disaster.” Allegory of the Cave Painting. Eds. Mirca & Van Gerven Oei. Extra City, Antwerp. Published by Mousse Milan, 2015.

“War Dialling: Image Transmissions from Saigon.” Mythologizing the Vietnam War. Eds. Good, Jennifer, et al. Cambridge: Cambridge Scholars Publishing, 2015.

“Law and Disorder.” Realism Materialism Art. Eds. Christoph Cox, Jenny Jaskey, Suhail Malik. Berlin: Sternberg Press, (2015): 137-43.

“Radical Contact Prints.” Camera Atomica, ed. John O’Brian, Art Gallery of Ontario, Toronto, Canada. Black Dog Publishing, (2014): 277-291.

“Deadly Algorithms: Can Legal Codes hold Software accountable for Code that Kills?” Radical Philosophy, Issue 187 UK, (2014): 2-8.

Truth is Concrete: A Handbook for Artistic Strategies in Real Politics. Ed. Florian Malzacher. Berlin: Sternberg Press, (2014): 221-223.

“Can the Sun Lie”, “Entering Evidence”, “Uneasy Listening”. Essay contributions to Forensis: The Architecture of Public Truth. Ed. Forensic Architecture, Berlin: Sternberg Press, (2014): 56-64, 279-314, 381-392.

“Atmospheric Correction.” On the Verge of Photography. Eds. Rubinstein, Golding and Fisher. Birmingham: Birmingham Article Press, (2013): 16-32.

“Walk-Back Technology: Dusting for Fingerprints and Tracking Digital Footprints.” Photographies (Routledge), Helsinki Photomedia 6.1 (2013): 159-167.

“The Most Dangerous Film in the World (Reprint).” Materialities. Ed. Gutfranski, Krzysztof. Gdańsk: Wyspa Progress Foundation / Wyspa Institute of Art from Gdańsk, (2013): 241-272.

“Probative Pictures: Image Proofs in Errol Morris’s Standard Operating Procedure.” CV Ciel Variable 91, Contemporary Art and Forensic Imagination. Ed. Vincent Lavoie (2013): 20-28.

“A Memorial in Exile in London’s Olympics: Orbits of Responsibility.” Open Democracy (2012).

“Impure Matter: A Forensics of WTC Dust.” Savage Objects. Ed. Pereira, Godofredo. Portugal: Imprensa Nacional Casa da Moeda (2012): 120-140.

“Material Malfeasance: Trace Evidence of Violence in Three Image-Acts.” Photoworks Issue 17 (November 2011-April 2012): 28-33.

“Tape 342: That Dangerous Supplement.” Cabinet 43, Forensics (2011): 86-89.

“Forensic Architecture.” Weizman, Tavares, Schuppli, Situ Studio. Post-Traumatic Urbanism, Architectural Design, Eds. Lahoud, Adrian and Charles Rice. 80.5 (2010): 58-63.

“Improvised Explosive Designs: The Film-Set as Military Set-Up.” Borderlands 9.2 (2010): 1-18.

“Oral Affliction or Archival Aphasia.” Memory Studies 2.2 (2008): 167-86.

“Of Moths and Machines.” Cosmos & History: The Journal of Natural and Social Philosophy 4.1-2 (2008): 286-306.

Guest Editor. “Exposé 67: Special Issue on Expo 67.” Winter Edition Vol. 2-22. Saskatoon: Blackflash, 2004-05.